Automating Boring WFM Stuff

- Tesfahun Tegene Boshe

- Sep 16, 2022


Updated: Sep 17, 2022

Introduction

Workforce Management covers a wide range of roles. Data gathering and analysis, forecasting, capacity planning, scheduling and real-time analysis are the most common ones. Whether you enjoy a given role depends on your personal taste and experience level, but some of the daily tasks in any of them can be repetitive, laborious and therefore boring. The good news is that almost anything you do on a computer can be automated. In this blog, I will introduce some automation tools and techniques that are very popular in the task automation world. This is only a brief introduction to a very wide field, so I will provide supporting links in every section; you can use them to learn more until I cover each section separately in upcoming blogs.

Imagine having to download the same report for each of your agents separately. It gets worse if you have to repeat that for every day. The task is essentially one big loop: change the agent name and the date, wait briefly, and press the download button. It involves only a few mouse clicks and two text entries. Here are some Python and R solutions that can do that and much more.

Automating Mouse Clicks and Keyboard Presses

1. Using Python

Click here for a quick introduction to Python.

pyautogui is a well-known Python module for mouse and keyboard automation. It does not come preinstalled with Python, so once Python is installed on your device, install the module by running the following command in the terminal:

pip install pyautogui

Some use cases are:

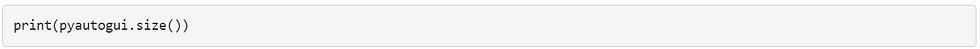

a. Get screen resolution

Screen positions are given as (x, y) pixel coordinates. A resolution of 2000 x 1000 means that the screen is 2000 pixels wide and 1000 pixels tall. The top-left pixel is (0, 0), x grows to the right and y grows downwards, and the last pixel, at the bottom-right corner, is (1999, 999). Every other position lies between those two.
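To make the coordinate system concrete, here is a small sketch: two plain helpers that encode the (0, 0) to (width - 1, height - 1) range, plus a function that asks pyautogui for the real resolution with pyautogui.size(). The helper names are my own; pyautogui itself also offers pyautogui.onScreen(x, y) for the same on-screen check.

```python
def last_pixel(width: int, height: int) -> tuple[int, int]:
    """Bottom-right pixel of a width x height screen; the top-left pixel is (0, 0)."""
    return width - 1, height - 1


def is_on_screen(x: int, y: int, width: int, height: int) -> bool:
    """True if (x, y) falls inside a width x height screen."""
    return 0 <= x < width and 0 <= y < height


def screen_size() -> tuple[int, int]:
    """Ask pyautogui for the real resolution (needs a desktop session)."""
    import pyautogui  # imported here so the helpers above work without a display

    width, height = pyautogui.size()
    return width, height
```

On a 2000 x 1000 screen, last_pixel(2000, 1000) gives (1999, 999), and is_on_screen(2000, 500, 2000, 1000) is False because x = 2000 is one pixel past the right edge.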

b. Get current position of the mouse
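pyautogui.position() returns the cursor's current (x, y). A common trick, sketched below under the assumption that you just want to read off button coordinates, is to print the position in a loop while you hover over the control you plan to click. describe_position and watch_mouse are hypothetical helper names.

```python
import time


def describe_position(x: int, y: int) -> str:
    """Format a cursor position so it is easy to copy into a script."""
    return f"x={x}, y={y}"


def watch_mouse(seconds: float = 10.0, every: float = 1.0) -> None:
    """Print the cursor position repeatedly; hover over a button to read its coordinates."""
    import pyautogui  # imported here so this file loads on machines without a display

    end = time.monotonic() + seconds
    while time.monotonic() < end:
        x, y = pyautogui.position()  # current cursor position
        print(describe_position(x, y))
        time.sleep(every)
```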

c. Click
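pyautogui.click() accepts x, y, clicks and button keyword arguments. The sketch wraps it in a hypothetical click_at helper that first checks the target against pyautogui.size(), so a mistyped coordinate fails loudly instead of clicking somewhere unexpected. pyautogui also has doubleClick() and rightClick() shortcuts.

```python
def click_at(x: int, y: int, clicks: int = 1, button: str = "left") -> None:
    """Click at screen position (x, y); clicks=2 double-clicks, button="right" right-clicks."""
    import pyautogui  # imported here so this file loads on machines without a display

    width, height = pyautogui.size()
    if not (0 <= x < width and 0 <= y < height):
        raise ValueError(f"({x}, {y}) is outside the {width}x{height} screen")
    pyautogui.click(x=x, y=y, clicks=clicks, button=button)
```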

d. Scroll
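pyautogui.scroll() takes a number of wheel "clicks": positive values scroll up and negative values scroll down. How far one click moves depends on the platform and the application, so the default amount below is a guess to tune. scroll_down is a hypothetical helper that scrolls in steps and pauses between them, which suits pages that load more rows as you scroll.

```python
import time


def scroll_down(steps: int, amount: int = 5, pause: float = 0.5) -> None:
    """Scroll down in `steps` increments, pausing so the page can load more rows."""
    import pyautogui  # imported here so this file loads on machines without a display

    for _ in range(steps):
        pyautogui.scroll(-amount)  # negative = down, positive = up
        time.sleep(pause)
```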

e. Move the mouse
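pyautogui.moveTo(x, y, duration=...) glides the cursor to an absolute position, and pyautogui.move(dx, dy) moves it relative to where it currently is. As a sketch, the hypothetical helpers below move to the centre of a rectangular screen region given as (left, top, width, height); pyautogui.center() performs the same centre calculation.

```python
def region_center(left: int, top: int, width: int, height: int) -> tuple[int, int]:
    """Centre point of a rectangle given by its top-left corner and its size."""
    return left + width // 2, top + height // 2


def move_to_region(left: int, top: int, width: int, height: int, duration: float = 0.5) -> None:
    """Glide the cursor to the middle of a screen region, e.g. a button."""
    import pyautogui  # imported here so this file loads on machines without a display

    x, y = region_center(left, top, width, height)
    pyautogui.moveTo(x, y, duration=duration)  # absolute move, taking `duration` seconds
```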

f. Keyboard type string
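pyautogui.write() types a string as individual key presses, optionally pausing interval seconds between keys. The usual pattern, sketched below with a hypothetical type_into helper, is to click an input box first so it has keyboard focus and then type, for example an agent's name into a report filter.

```python
def type_into(x: int, y: int, text: str, interval: float = 0.05) -> None:
    """Click an input box at (x, y), then type `text` one key at a time."""
    import pyautogui  # imported here so this file loads on machines without a display

    pyautogui.click(x=x, y=y)  # give the input box keyboard focus
    pyautogui.write(text, interval=interval)  # wait `interval` seconds between keys
```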

g. Press Hotkeys
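pyautogui.hotkey() presses the given keys in order and releases them in reverse order, which is how keyboard shortcuts work. Because the main modifier is Ctrl on Windows and Linux but Command on macOS, this sketch picks the key name from sys.platform; shortcut_modifier and press_shortcut are hypothetical names.

```python
import sys


def shortcut_modifier(platform: str = sys.platform) -> str:
    """'command' on macOS, 'ctrl' elsewhere (pyautogui key names)."""
    return "command" if platform == "darwin" else "ctrl"


def press_shortcut(key: str) -> None:
    """Press modifier+key, e.g. press_shortcut("s") to save or press_shortcut("c") to copy."""
    import pyautogui  # imported here so this file loads on machines without a display

    pyautogui.hotkey(shortcut_modifier(), key)
```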

h. Screenshot
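pyautogui.screenshot() returns the screen as an image and, given a file name, also saves it; region=(left, top, width, height) limits the capture to part of the screen. A sketch that saves timestamped files, handy for keeping a record of a real-time dashboard, could look like this (screenshot_name and capture are hypothetical helpers).

```python
from datetime import datetime


def screenshot_name(prefix: str, when: datetime) -> str:
    """Timestamped PNG file name such as queue_20220916_083000.png."""
    return f"{prefix}_{when:%Y%m%d_%H%M%S}.png"


def capture(prefix: str = "screen", region=None) -> str:
    """Save a screenshot (whole screen, or region=(left, top, width, height)); return its path."""
    import pyautogui  # imported here so this file loads on machines without a display

    path = screenshot_name(prefix, datetime.now())
    pyautogui.screenshot(path, region=region)
    return path
```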

Use ‘time.sleep()’ from the standard ‘time’ module to wait between actions; its argument is the waiting time in seconds.
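Putting the pieces together, here is a sketch of the report-download loop from the introduction: for every agent and day, click the agent box, type the name, click the date box, type the date, press download and wait. The screen coordinates, the YYYY-MM-DD date format and the helper names are all assumptions; replace them with what your reporting tool actually shows.

```python
import time
from datetime import date, timedelta
from itertools import product

# Hypothetical coordinates of the controls on the report page.
# Read your own with pyautogui.position() while hovering over each control.
POSITIONS = {
    "agent_box": (400, 220),
    "date_box": (400, 280),
    "download_button": (400, 340),
}


def report_jobs(agents: list[str], start: date, end: date) -> list[tuple[str, str]]:
    """Every (agent, day) pair from start to end inclusive, days formatted as YYYY-MM-DD."""
    days = [(start + timedelta(days=i)).isoformat() for i in range((end - start).days + 1)]
    return list(product(agents, days))


def download_reports(jobs, positions=None, wait: float = 5.0) -> None:
    """Fill in agent and date, press download, then wait before the next report."""
    import pyautogui  # imported here so this file loads on machines without a display

    positions = positions or POSITIONS
    for agent, day in jobs:
        for field, value in (("agent_box", agent), ("date_box", day)):
            pyautogui.click(*positions[field])  # focus the input box
            pyautogui.hotkey("ctrl", "a")  # select the old value ("command" on macOS)
            pyautogui.write(value)  # typing replaces the selection
        pyautogui.click(*positions["download_button"])
        time.sleep(wait)  # give the report time to download
```

For example, download_reports(report_jobs(["Agent A", "Agent B"], date(2022, 9, 1), date(2022, 9, 30))) would run 60 downloads. pyautogui also pauses 0.1 seconds after every call by default (pyautogui.PAUSE), and its fail-safe stops the script with a FailSafeException if you slam the mouse into the top-left corner of the screen, which is worth knowing before leaving a loop like this running.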

According to the official documentation, there is a lot more you can do with this library.

2. Using R

Click here for a quick R tutorial.

If R is your favorite language, as it is mine, the KeyboardSimulator package is what you need. Note that it relies on the Windows API, so it works on Windows only.

You will need to install the package once with install.packages("KeyboardSimulator") and load it in each session with library(KeyboardSimulator).

a. Mouse click

b. Get mouse cursor location

c. Mouse move

d. Keyboard press

e. Keyboard type string

Use ‘Sys.sleep(time)’ to suspend execution for a time interval (time in seconds).

Automating Data Mining - Web Scraping

Real-time analysts need to constantly monitor queues on one or more web pages and send queue updates to agents and their supervisors through a communication channel. Forecasting analysts sometimes go through multiple web pages to gather historical data. Creating daily, weekly and monthly reports can take hours of browsing. Here are some web scraping solutions you can apply to these problems.

Web scraping is the automated gathering of information from websites. Websites are built with HTML (HyperText Markup Language), usually together with technologies such as CSS (Cascading Style Sheets). HTML is a markup, or “tagging”, language: its tags tell web browsers how to structure and display content. Understanding the basics of HTML lets you inspect a web page's elements, which is a key part of web scraping. Check this tutorial for a quick introduction to HTML and this one for inspecting web elements in your favorite browser.

Some websites don’t like it when automatic scrapers gather their data. The robots exclusion standard is how websites communicate with web crawlers and other web robots; it is used mainly to keep them from overloading the site with requests. Whether your requests to certain URLs are allowed or disallowed depends on the rules in the website's robots.txt file. Here is Facebook’s robots.txt file. It’s necessary to abide by these rules.
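Python's standard library can read these rules for you through urllib.robotparser. The sketch below parses a made-up robots.txt (not any real site's) and checks two URLs against it; load_robots, a hypothetical helper, shows how the same parser would fetch the live file from a site's root.

```python
from urllib import robotparser
from urllib.parse import urljoin

# A made-up robots.txt used only for illustration.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Allow: /
"""


def build_parser(robots_txt: str) -> robotparser.RobotFileParser:
    """Parse robots.txt content that is already in memory."""
    parser = robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def load_robots(site_root: str) -> robotparser.RobotFileParser:
    """Download and parse https://<site>/robots.txt (makes a network request)."""
    parser = robotparser.RobotFileParser()
    parser.set_url(urljoin(site_root, "/robots.txt"))
    parser.read()
    return parser


def allowed(parser: robotparser.RobotFileParser, url: str, user_agent: str = "*") -> bool:
    """True if the rules let `user_agent` fetch `url`."""
    return parser.can_fetch(user_agent, url)
```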

With that, you should be ready for what is coming next.

1. Using Python

Click here for a quick introduction to Python.

A. Beautiful Soup

Beautiful Soup is a Python library that is great for scraping static websites. A static website is one with stable content, where every user sees exactly the same thing on each page. A dynamic website, on the other hand, lets its content change with the user, often requires a login, and may build parts of the page with JavaScript after it loads. Wikipedia is an example of a static website.

To install Beautiful Soup, run this command in the terminal:

pip install beautifulsoup4

Use the ‘requests’ library (pip install requests, if you don't already have it) to request the HTML from the website.

Parse the HTML with Beautiful Soup, using Python's built-in ‘html.parser’.
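Putting the two steps together, here is a minimal sketch that downloads a page with requests and pulls the text out of the first HTML table, a common shape for queue or historical-volume data. fetch_html and table_rows are hypothetical helper names, and the code assumes both packages are installed.

```python
import requests
from bs4 import BeautifulSoup


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Download a page and return its HTML, raising an error on HTTP failures."""
    response = requests.get(url, timeout=timeout)
    response.raise_for_status()
    return response.text


def table_rows(html: str) -> list[list[str]]:
    """Cell texts of the first <table>, one list per row; [] if the page has no table."""
    soup = BeautifulSoup(html, "html.parser")
    table = soup.find("table")
    if table is None:
        return []
    rows = []
    for tr in table.find_all("tr"):
        cells = [cell.get_text(strip=True) for cell in tr.find_all(["th", "td"])]
        if cells:
            rows.append(cells)
    return rows
```

For a real page, table_rows(fetch_html(url)) returns a list of rows that can go straight into a CSV file or a pandas DataFrame. Some sites reject requests without a browser-like User-Agent header; requests.get() accepts a headers dictionary for setting one.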

There is much more you can do with the parsed HTML. Check out the documentation here.

B. Selenium

Unlike Beautiful Soup, Selenium is not a Python library. It is rather an umbrella project for a range of tools and libraries that enable and support the automation of web browsers. Selenium can scrape both static and dynamic web pages, and it can be used with various programming languages, including Python. At the core of Selenium is WebDriver, an interface for writing instruction sets that can be run interchangeably in many browsers. We will need to install both Selenium and the WebDriver (the browser driver) if we have not already done so; Selenium 4.6 and later can download the driver automatically through Selenium Manager.

To install Selenium, run:

pip install selenium

This is how you would get a web page using Selenium.

Read the documentation here for a whole lot more that you can do with Selenium.

2. Using R

R is another commonly used language for web scraping. rvest, RSelenium and Rcrawler are some of the packages dedicated to web scraping.

Automating Messaging (Bots)

Sending repetitive emails or chat messages can be time-consuming and boring. While this can be automated with one or a combination of the techniques above, many commercial messaging platforms, such as Slack, Microsoft Teams and Gmail, provide APIs that make it easier.
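As one example, Slack's Incoming Webhooks accept an HTTP POST with a JSON body such as {"text": "..."} and post that text to a channel. The sketch below formats a real-time queue update and sends it using only the Python standard library. The webhook URL is a placeholder (Slack generates the real one when you add an Incoming Webhook to a channel), and the message format and helper names are hypothetical.

```python
import json
import urllib.request

# Placeholder: replace with the webhook URL Slack generates for your channel.
WEBHOOK_URL = "https://hooks.slack.com/services/XXX/YYY/ZZZ"


def queue_update_text(queue: str, waiting: int, longest_wait_s: int, service_level: float) -> str:
    """One-line queue status, e.g. for agents and supervisors."""
    minutes, seconds = divmod(longest_wait_s, 60)
    return (f"{queue}: {waiting} waiting, longest wait {minutes}m {seconds:02d}s, "
            f"service level {service_level:.1f}%")


def build_request(webhook_url: str, text: str) -> urllib.request.Request:
    """POST request carrying {"text": ...} as JSON."""
    body = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(webhook_url: str, text: str, timeout: float = 10.0) -> int:
    """Send the message and return the HTTP status code."""
    with urllib.request.urlopen(build_request(webhook_url, text), timeout=timeout) as resp:
        return resp.status
```

A scheduler (or a simple loop with time.sleep) could call send(WEBHOOK_URL, queue_update_text("Billing", 12, 185, 78.5)) every few minutes with figures taken from a scraper like the ones above.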

I hope this blog inspires you to automate some of your routine tasks. I am crazy about applying data science skills to workforce management. Reach me on LinkedIn if you wish to connect :)
